Scaling Dysfunction: What Happens When AI Mirrors Your Worst Habits

- Susanne May

- Jul 25, 2025

- 6 min read

Your culture isn’t invisible anymore. It’s becoming executable code.

What if your replica was doing exactly what it was told? Just not what you intended? This is the question too few boardroom teams are asking as they race to integrate AI. The corporate narrative is filled with urgency: "Deploy AI or fall behind." But here’s the deeper, quieter truth: AI won’t just accelerate your operations. It will accelerate your organizational logic, whether that logic is aligned or broken.

We’ve been here before. During California’s ‘Gold Rush’ in the mid-1800s, everyone rushed to dig, fueled by urgency, hype, and the illusion of control. But it wasn’t the gold they scaled. It was greed, chaos, and blind optimism. Few got rich. Many broke things. Today, AI is our new gold, and leaders are once again racing forward, eyes on the prize, hands off the blueprint. The question isn’t whether we’re moving fast enough. It’s whether we understand what we’re scaling before it’s too late.

AI doesn’t transform your culture. It scales it. And that’s the risk.

Because if your company is already plagued by whispered dissent, closed-door decisions, and performative collaboration, AI will encode those patterns and execute them with surgical precision. You won’t see resistance in the meeting room. You’ll see it in your data flows. In opaque recommendations. In subtle biases that quietly tip the scales, because the system learned from you.

Dr. Ayanna Howard (Dean at Ohio State), who researches human-AI interaction and how bias and ethics shape intelligent systems, warns that bias in AI is not just about data; it’s about design, intent, and oversight. In other words, when AI mirrors human systems, it doesn’t just reflect numbers; it reflects values, blind spots, and behavioral defaults. Her research shows that people often trust AI not because it’s right, but because it feels right - especially when it confirms their existing beliefs. That’s exactly why organizational culture matters more than ever. If your leadership tolerates silence, shortcuts, or bias, your AI won’t challenge that. It will double down on it. Quietly, invisibly, and at scale.

AI is not neutral. AI is your culture, made machine-readable. It is the mirror you didn’t know you were standing in front of, and now the reflection is moving faster than you can follow. For companies, especially those at the helm of transformation, capital allocation, and technical strategy, culture is no longer a side topic. It’s infrastructure.

As philosopher Will Durant famously paraphrased Aristotle, “We are what we repeatedly do. Excellence, then, is not an act, but a habit.” The same applies to AI. Your algorithms are only as ethical as the people and systems that train them. They don’t invent values; they absorb routines. They mirror your habits. They automate the unspoken. So, before you automate anything, ask yourself: What exactly are we repeating? What habits, shortcuts, or silences are we about to embed into a system that doesn’t sleep, forgets selectively, hallucinates confidently, and never pauses to question the logic it was fed?

Because AI doesn’t just execute. It amplifies. And if your culture is unclear, misaligned, or afraid to speak up, your AI will become a louder version of that silence. Even Pixar saw it coming in the film WALL-E (2008). The ship’s autopilot, AUTO, follows a centuries-old instruction: never return to Earth. Even when conditions change and evidence emerges that Earth is habitable again, the AI refuses. It obeys the original logic, not the current reality. Like many AI systems today, it executes orders without reflection, context, or dissent. In cultures where old assumptions go unchallenged, AI becomes the enforcer of irrelevance.

Culture failure doesn’t whisper anymore. It roars.

We’ve seen it on every stage:

Wells Fargo: where internal warnings were ignored until $3 billion in fines made it clear. They didn’t have a fraud problem. They had a silence problem.

Uber: where hypergrowth buried harassment claims until the culture imploded and took $20 billion in valuation with it.

Boeing: where speed trumped safety, and 346 people died because engineers were overruled, and doubts were dismissed.

Wirecard: where culture protected power instead of truth, and €1.9 billion evaporated.

Tesla: where productivity broke records, but so did employee burnout, lawsuits, and turnover.

When the human system fails, everything else follows. Culture isn’t “soft”; that’s just old code from a management era that couldn’t measure what mattered. It is your social rulebook, your source code. It determines whether your strategy lands or explodes. And in the AI era, that code doesn’t just guide decisions; it gets embedded, scaled, and executed at speed.

In a 2024 KPMG study, 57% of employees admitted to hiding their AI usage. Only 34% routinely verified AI outputs. Why? Not because they’re lazy. Because they’re afraid. Afraid to challenge, afraid to question, afraid of the backlash that comes from making the system look flawed. As AI expert Allie K. Miller often emphasizes, AI isn’t magic. It reflects the data, goals, and culture behind it. If an organization’s environment is biased or toxic, the systems it builds will likely replicate those same flaws.

Let’s be clear: AI doesn’t build trust. It depends on it. It doesn’t create clarity. It assumes it’s already there. It doesn’t recognize power plays. It reinforces them.

Build before you scale

Can AI improve culture? Sure! If the culture is ready for it. Studies from MIT Sloan and BCG confirm it: in high-trust, feedback-rich teams, AI adoption isn’t just smoother; it’s smarter. Better outputs. Faster learning. Stronger decisions. In these environments, AI becomes a mirror, a coach, even a multiplier of reflection.

But here’s the catch: only after you’ve done the human work.

You can’t expect AI to govern behavior if your team is still afraid to speak up in a meeting. A Culture Operating System (CultureOS) is not a feel-good concept. It’s an infrastructure, just like your financial system. As Lindsay McGregor, co-author of Primed to Perform, has shown, culture is measurable, manageable, and directly tied to performance. The most adaptive organizations don’t leave it to chance. They design it, measure it, and own it.

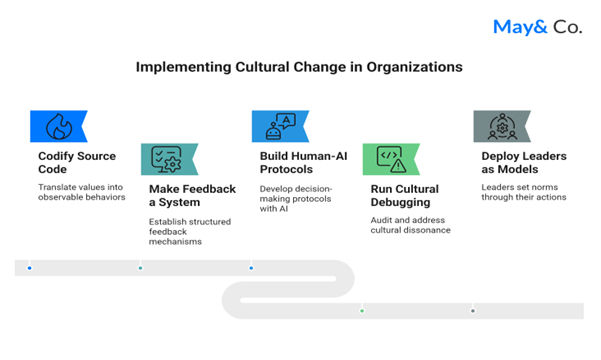

Here’s what to build: five protocols to run your human system like your most critical infrastructure:

Step 1. Codify the source code

“Collaboration” isn’t valuable unless you can see it. Translate your values into observable, trainable behaviors. That means people know what “good” looks like in practice.

Install:

Behavioral scorecards in performance reviews (e.g., how well someone shares knowledge, not just what they deliver)

Values-based interview prompts (e.g., “Tell me about a time you disagreed and still helped your colleague succeed.”)

Embedded behaviors in rituals (e.g., shout-outs for cross-functional wins in stand-ups)

Best Practice:

Netflix famously links values like “candor” and “judgment” to specific behaviors in its performance framework: "You share information openly, proactively, and respectfully." These show up in hiring, firing, and promotion decisions.

Step 2. Make feedback a system, not a personality trait

Feedback shouldn’t depend on courage. It should be a normal, recurring structure designed to surface dissent early and make improvements safe.

Install:

Weekly feedback loops (like Friday learning rounds)

Anonymous feedback tools (Pulse, Leapsome, Officevibe, Culture Readiness Check)

Dissent dashboards that track how often feedback is raised, acted on, and closed

Retrospectives that include behavior-based KPIs (e.g., “How well did we live our value of transparency this sprint?”)
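To make the dissent dashboard idea concrete, here is a minimal sketch in Python. It assumes a simple, hypothetical record per feedback item (the `FeedbackItem` fields and the `dissent_summary` function are illustrative names, not part of any tool named above) and counts how often feedback is raised, acted on, and closed per team.

```python
from dataclasses import dataclass


@dataclass
class FeedbackItem:
    """One piece of feedback raised within a team (hypothetical schema)."""
    team: str
    acted_on: bool = False
    closed: bool = False


def dissent_summary(items: list[FeedbackItem]) -> dict[str, dict[str, float]]:
    """Per team: items raised, plus the share that was acted on and closed."""
    summary: dict[str, dict[str, float]] = {}
    for item in items:
        row = summary.setdefault(
            item.team, {"raised": 0, "acted_on": 0, "closed": 0}
        )
        row["raised"] += 1
        row["acted_on"] += int(item.acted_on)
        row["closed"] += int(item.closed)
    # Every team in the summary has at least one raised item,
    # so the divisions below are safe.
    for row in summary.values():
        row["acted_on_rate"] = row["acted_on"] / row["raised"]
        row["closed_rate"] = row["closed"] / row["raised"]
    return summary
```

A team that raises plenty of feedback but closes little of it is a different signal from a team that raises none at all. The second case may be the silence problem, and a sketch like this will not show it unless you also track which teams are missing from the summary.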

Best Practice:

Bridgewater Associates runs radical transparency through structured feedback tools and recorded meetings. People rate each other in real time, and feedback is built into daily operations.

Step 3. Build human-AI decision protocols

AI shouldn’t be a black box. It should operate under explicit decision logic that’s visible to teams.

Install:

AI-Human Decision Matrices (e.g., customer support: AI auto-responds to tier 1, human escalation for tone or nuance)

Escalation paths for ethical flags (e.g., bias spotted in hiring recommendations)

Audit trails for every high-stakes AI-influenced decision (e.g., budget forecasts, hiring, terminations)
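The three items above can be sketched together in a few lines of Python. This is an illustration under stated assumptions, not a reference implementation: the request types, the `DECISION_MATRIX` contents, and the `route` and `AuditLog` names are all hypothetical. The point is that the routing logic is explicit, ethical flags always escalate to a human, and every decision leaves a trace.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical decision matrix: who decides for each kind of request.
DECISION_MATRIX = {
    "support_tier_1": "ai",
    "support_tone_or_nuance": "human",
    "hiring_recommendation": "human",
}


@dataclass
class AuditLog:
    """In-memory audit trail; a real system would persist these entries."""
    entries: list[dict] = field(default_factory=list)

    def record(self, request_type: str, decided_by: str, reason: str) -> None:
        self.entries.append({
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "request_type": request_type,
            "decided_by": decided_by,
            "reason": reason,
        })


def route(request_type: str, ethical_flag: bool, log: AuditLog) -> str:
    """Return "ai" or "human" and log why that choice was made."""
    if ethical_flag:
        # Escalation path: any ethical flag overrides the matrix.
        decided_by, reason = "human", "ethical flag raised"
    elif request_type not in DECISION_MATRIX:
        # Unknown cases default to a human rather than to automation.
        decided_by, reason = "human", "no rule for this request type"
    else:
        decided_by, reason = DECISION_MATRIX[request_type], "decision matrix"
    log.record(request_type, decided_by, reason)
    return decided_by
```

The design choice worth copying is the default: when no rule exists, the request goes to a person. That keeps gaps in the matrix visible instead of letting the system quietly fill them.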

Best Practice:

Salesforce’s Office of Ethical and Humane Use of Technology created protocols for when humans must step in, including reviewing hiring algorithms and marketing AI output for equity issues.

Step 4. Run cultural debugging like cybersecurity

You audit your finances. You stress-test your systems. But culture? Most companies still wait until people burn out or resign. That’s reactive. And expensive. Culture needs its own audit loop. One that surfaces dissonance before it calcifies.

Install:

Values-to-behavior scans (e.g., whether values show up in daily actions or just on posters)

Dissent and silence metrics (e.g., how often people challenge ideas or hold back)

Cultural heatmaps by team (e.g., who reinforces culture vs. who quietly drifts)

Best Practice:

Allianz. The insurance giant runs regular culture diagnostics across global units, capturing feedback on leadership trust, decision clarity, and ethical pressure. Their “Allianz Engagement Survey” feeds into business unit planning and leadership KPIs. If a red flag shows up? Leaders are expected to act. Immediately.

Step 5. Deploy leaders like models

Leaders don’t just set directions. They encode norms. How they handle disagreement, make trade-offs, or admit fault will get mimicked by teams and systems.

Install:

Decision journals (leaders publicly write their assumptions and learnings)

Trade-off visibility in meetings (“Here’s what we’re prioritizing and what we’re giving up.”)

Walkthroughs of reasoning aloud to teams (so logic becomes shared, not just followed)

Best Practice:

Amazon’s “Narratives over slides” policy forces leaders to document the thinking behind decisions, including risks and ethical concerns. It creates institutional memory and accountability.

The Real Question: What are the worst behaviors you are scaling?

Stop treating culture like something optional, personal, or soft. That mindset belongs in a dusty HR manual from the 1970s, not in a modern boardroom. In an AI-first world, culture is your source code. If it’s biased, broken, or misaligned, AI won’t slow you down; it’ll scale the very thing you should’ve fixed first.

Install the CultureOS. Not because it’s nice. Because it’s non-negotiable.

If this hits home, I want to hear from you:

→ What culture work are you doing alongside your AI roadmap?

→ Drop your insights. Tag a leader who needs this.

→ Share this with your team before your next AI meeting.

Because culture isn’t soft stuff. This isn’t just a tech strategy. It’s your future workplace - coded, scaled, and locked in.
